People are having feelings about AI-generated imagery.

I’ve been meaning to outline some of my thoughts on the subject and present the way I believe AI can fit into image creation. Personally, I find it useful, and I’m going to describe some of the effects I think it is having (and will have) on the world and the way people think about creating imagery. Even as the models improve, I think the effect will be roughly the following:

- More people will generate images “casually” (e.g. adding a little personalized image to a birthday message)

- “Serious” craftspeople will use it to augment parts of their workflows

While it’s possible that eventually it will get so good that people will be able to skip the workflow bit entirely, and even the “serious” people will just generate something that they’re immediately happy with, I find it more likely that the idea of a workflow itself will adapt to this new reality.

A comparison.

The smartphone put photography within reach of almost everyone. That was a technological shift that allowed people to flood the world with mostly artless photos. And I don’t say “artless” pejoratively! Most people don’t take photos with “artistic intent” (we can have a debate about what that means another time). Many smartphone photos, including thousands of my own, could be validly referred to as smartphone slop: bad photographs wasting storage and energy. The person taking the photo gets something out of it though, whether it’s short-term amusement, some kind of exploration, a memory, or something to use to connect with another person.

We could also talk endlessly about the debates through the ages on whether or not photography even is art, how it fits into capitalism, or how it shifts perceptions of reality. Walter Benjamin’s essay The Work of Art in the Age of Mechanical Reproduction is a good place to start reading up on the subject. All of these are questions that are coming up again today, as they did through the development of photography as a craft, art, and industry.

Speaking of photography as an industry, I’d say the smartphone created a massive shift in the landscape with two major effects:

- Some people who otherwise may have hired a photographer did not.

- A lot (really a lot) of photographs that never would have been taken were taken.

We stopped paying a segment of the industry, and we flooded the market with low-effort alternatives specifically designed to replicate styles, techniques, and media used by professional photographers.

To the seasoned photographer, though, the smartphone is another tool they can make use of, even if they’d get better results out of their “real” camera. I take a ridiculous number of photos with my phone, sometimes with artistic intent, and sometimes just because I want a memory of that moment.

Some of my favourite smartphone shots over the last year. I like to think that my experience with “real” photography helped me get better smartphone shots.

My slop. Just random stuff that I want to remember. Not great photos. Just fun things I might send someone, post online, or keep for myself.

I believe we’re going to see similar things happen with other creative industries. At the “lower end” of the industry, some artists who perhaps offered cheaper services for lower-quality work may lose gigs because people can choose to just generate something themselves rather than pay.

I think we’ll also see a lot of people who have intimate knowledge of different visual crafts start to use the tools to help them move faster: most art exists under market constraints, after all. However, these people will use the tools differently. They won’t just generate imagery, but use the tools to help guide their process or automate steps they don’t love.

Lately, I've found myself doing exactly this. I use AI tools to brainstorm, plan, and help me generate artifacts that I use in my work (written, coded, visual, etc.).

In practice.

About a week ago, I was trying to generate a logo and some branded imagery for this newsletter.

At first I decided to generate something with Nano Banana Pro because all the cool kids were talking about it, and I actually had some pretty good results toying with it previously. I really enjoyed ideating with it for the newsletter branding, but in the end I didn’t like the image it generated:

An icon for the newsletter

The visual quality is adequate in broad strokes and it looks nice at a glance, but it doesn’t fully make sense when you dig into the details. That’s often the case with AI-generated imagery.

So I decided to go a very different route.

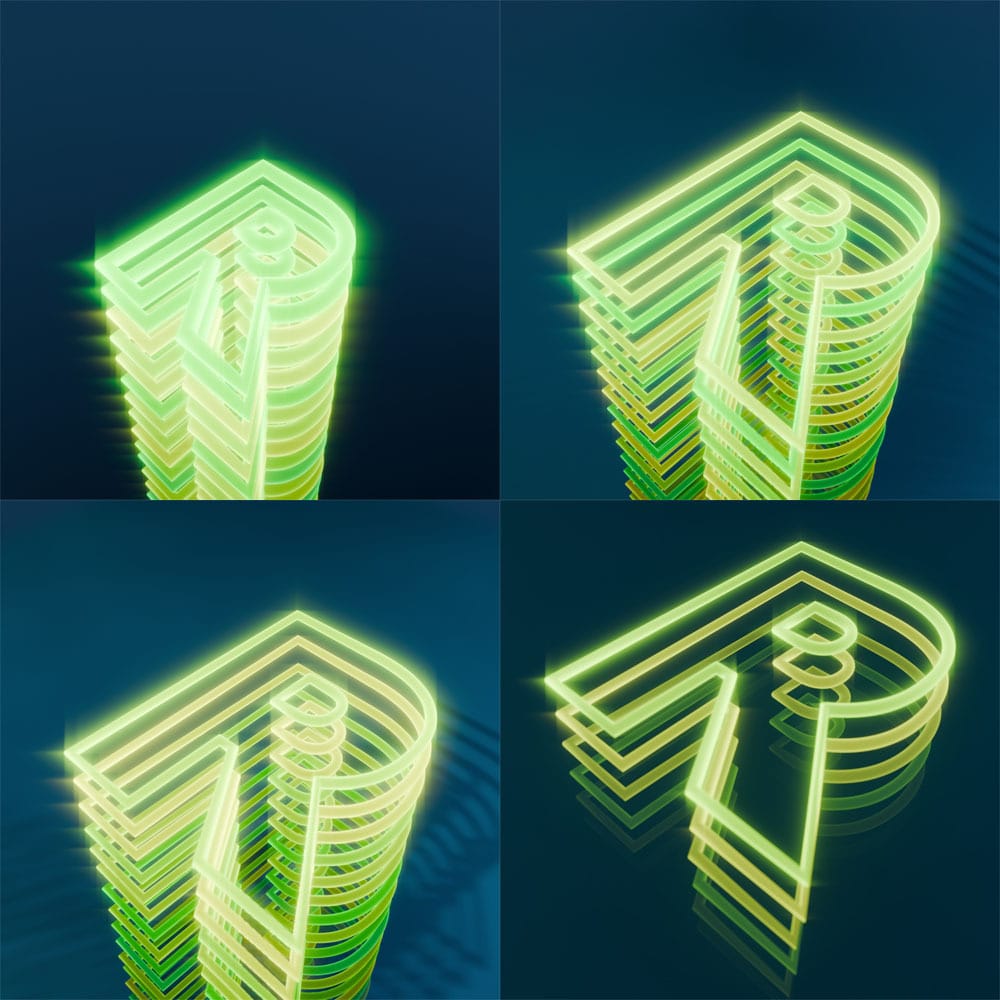

I have a background in graphical software packages for 3D and image editing. I don’t know Blender very well, but I can stumble through it pretty decently using my knowledge of other tools, and I have a Photoshop subscription. So I asked the ChatGPT Codex CLI to do something perhaps a bit unusual: to write some Python to generate a Blender scene for me. I described roughly how I would make the scene and it mostly worked.
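For readers who haven't scripted Blender before, a script of this kind might look roughly like the minimal sketch below. It is not the script Codex produced for this image. The `SceneSpec` class, its fields, and every value in it are hypothetical placeholders, and the scene (one cube, one sun light, one camera) is only an illustration. The `bpy` module is only available inside Blender's bundled Python, so the sketch falls back to printing a message when run anywhere else.

```python
import math
from dataclasses import dataclass

try:
    import bpy  # Blender's Python API; only importable inside Blender
except ImportError:
    bpy = None


@dataclass
class SceneSpec:
    """Hypothetical description of a tiny scene (illustrative values only)."""
    cube_size: float = 2.0
    cube_location: tuple = (0.0, 0.0, 1.0)
    light_location: tuple = (4.0, -4.0, 6.0)
    light_energy: float = 3.0
    camera_location: tuple = (7.0, -7.0, 5.0)
    camera_rotation_deg: tuple = (63.0, 0.0, 45.0)


def camera_rotation_rad(spec):
    """Blender expects Euler rotations in radians, so convert from degrees."""
    return tuple(math.radians(angle) for angle in spec.camera_rotation_deg)


def build_scene(spec):
    """Add the objects described by `spec` to the currently open Blender scene."""
    if bpy is None:
        raise RuntimeError("bpy is not available; run this script inside Blender")

    # A single cube standing in for the real subject of the image.
    bpy.ops.mesh.primitive_cube_add(size=spec.cube_size, location=spec.cube_location)

    # A sun light; the newly added object becomes the active object.
    bpy.ops.object.light_add(type='SUN', location=spec.light_location)
    bpy.context.object.data.energy = spec.light_energy

    # A camera, registered as the scene's render camera.
    bpy.ops.object.camera_add(
        location=spec.camera_location,
        rotation=camera_rotation_rad(spec),
    )
    bpy.context.scene.camera = bpy.context.object


if __name__ == "__main__":
    if bpy is None:
        print("Not running inside Blender; nothing was built.")
    else:
        build_scene(SceneSpec())
```

Keeping the scene description in a plain data class, separate from the `bpy` calls, is one way to make this kind of back-and-forth with a coding assistant easier: the numbers can be tweaked by hand without touching the Blender-specific code. Fog, shaders, and render settings are deliberately left out of the sketch.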

After a few iterations with Codex, I eventually hit a point of diminishing returns, at which point I took over. I made some changes to the fog effects, shaders, and renderer, and got what I needed.

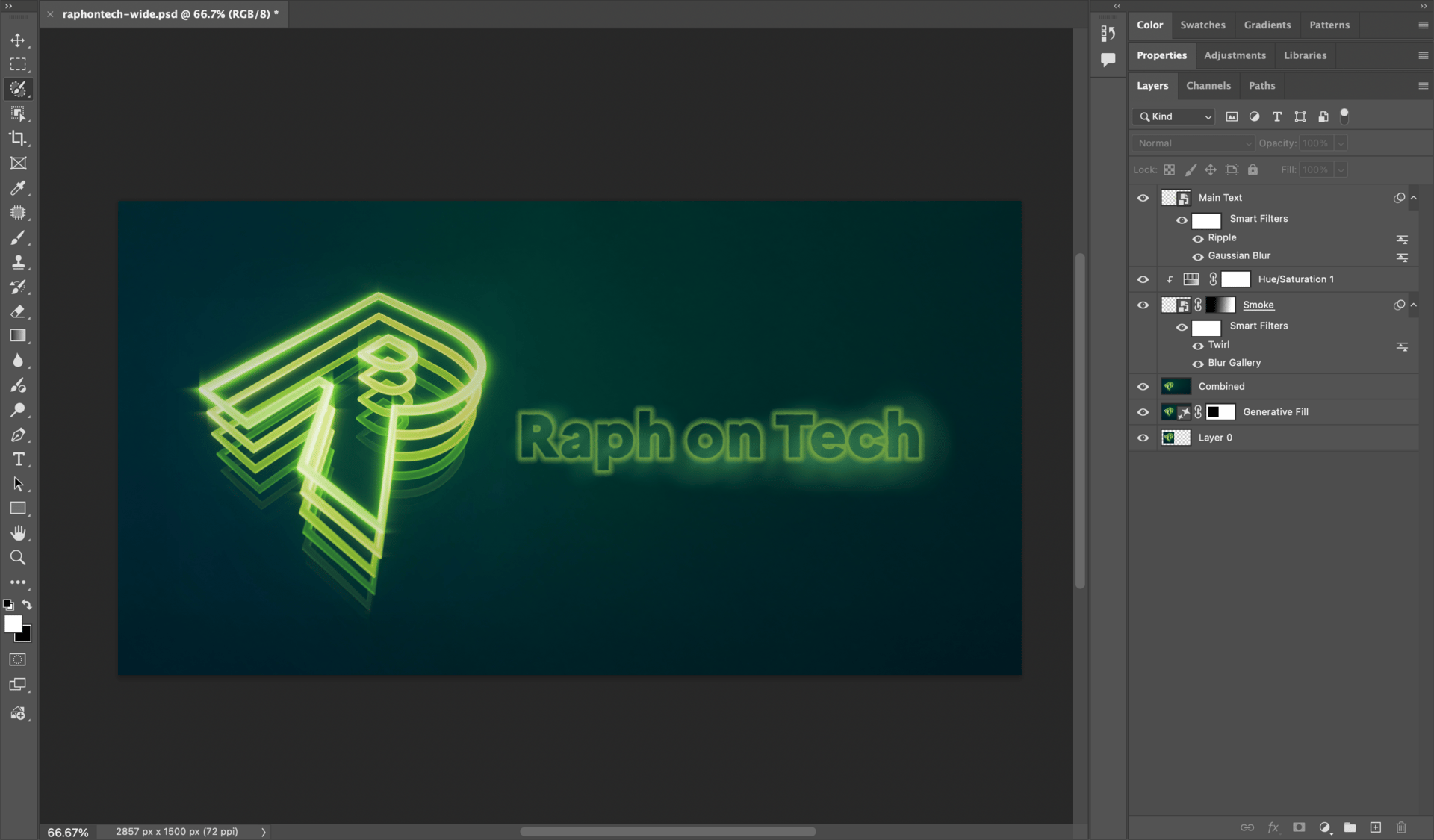

After I completed this bit, I shoved the image into Photoshop, extended it horizontally using Photoshop’s AI-powered generative fill, made some manual adjustments, and added some effects using my hard-earned Photoshop skills (honed since ~2002):

Not the most complicated setup, but probably beyond the scope of a beginner.

I’m convinced that the intersection of the AI tools and my prior knowledge is what enabled me to create this image as quickly as I did. Let me reiterate: the AI didn’t create the image for me. I guided it very specifically and had it translate my knowledge into tasks it could accomplish with the tools I was less familiar with.

What it means.

Smartphone cameras didn’t destroy the art of photography; they just made it more approachable, accessible, and consumable. I think AI-generated images are having a similar effect on other forms of visual creation.

I wanted to highlight the workflow I developed to create this image because I think it demonstrates that the elevating effect comes from combining an understanding of a craft with AI tools: I never would have bothered to make an image like this without these systems. I no longer have a license to the 3D tools I know well, and even with those, I probably wouldn’t have taken the time to do something like this for a small side project. It took me a bit over an hour. I think it would probably have taken me four or five hours if I’d had to teach myself the notoriously awkward Blender interface and the intricacies of the tool. Instead, I was able to use my knowledge of the craft, apply it to a tool that was less familiar to me, and create something that I’m genuinely happy with. The alternatives of using either pure AI, or spending the same amount of time with no AI at all, would both have led to half-baked results. The tools didn’t replace my craft or take away someone else’s; they enabled me to do more than I previously could.